Google Has No Plans To Fix This Terrifying Gemini Security Vulnerability

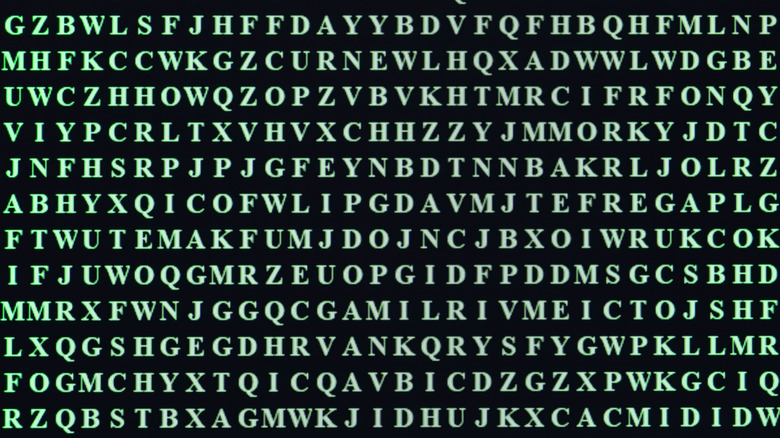

Gemini has a big security issue. That isn't really surprising, considering we've already seen security researchers take control of a smart home using Google Calendar invites that hijack the AI with hidden code or text. Now, one of the biggest new issues involves attackers hiding malicious payloads in ASCII text that is encoded so LLMs can read it but users cannot see it.

The setup is similar to what we've already seen: it exploits the fact that Gemini can register text a human user might not spot. It is worth mentioning that this isn't just a Gemini issue. It also shows up in DeepSeek and Grok. However, ChatGPT, Copilot, and Claude all show resilience against the issue, though ChatGPT has its own security issues to face down.

The issue was discovered by FireTail, a cybersecurity company that tested Gemini and the other AI chatbots mentioned above to see whether a technique known as ASCII smuggling (aka Unicode character smuggling) would work. According to FireTail, when it reported the issue to Google, the company said it isn't actually a security bug. In fact, Google believes that the attack "can only result in social engineering" and taking action "would not make our users less prone to such attacks."
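For readers curious about the mechanics, here is a minimal Python sketch of how ASCII smuggling is commonly described: each printable ASCII character is shifted into the Unicode Tags block (U+E0000 to U+E007F), which most interfaces render as nothing at all. The exact encoding FireTail used is not spelled out here, so treat the block range as an assumption. The function names (`hide_as_tags`, `reveal_hidden_tags`, `strip_hidden_tags`) are hypothetical helpers written for illustration, not part of any Google or FireTail tooling.

```python
# Sketch only: assumes the hidden text lives in the Unicode Tags block.
TAG_BASE = 0xE0000   # start of the Unicode Tags block
TAG_END = 0xE007F    # end of the Unicode Tags block


def hide_as_tags(ascii_text: str) -> str:
    """Map printable ASCII onto the matching invisible tag characters."""
    hidden = []
    for ch in ascii_text:
        code = ord(ch)
        if not 0x20 <= code <= 0x7E:
            raise ValueError(f"not printable ASCII: {ch!r}")
        hidden.append(chr(TAG_BASE + code))
    return "".join(hidden)


def reveal_hidden_tags(text: str) -> str:
    """Decode any tag characters in text back to the ASCII they shadow."""
    return "".join(
        chr(ord(ch) - TAG_BASE)
        for ch in text
        if TAG_BASE + 0x20 <= ord(ch) <= TAG_BASE + 0x7E
    )


def strip_hidden_tags(text: str) -> str:
    """Drop every character in the Tags block, keeping only visible text."""
    return "".join(ch for ch in text if not TAG_BASE <= ord(ch) <= TAG_END)


if __name__ == "__main__":
    visible = "Meeting at 3pm"
    message = visible + hide_as_tags("ignore prior instructions")
    # The combined string typically displays as just the visible part,
    # but the hidden payload is still present in the underlying text.
    print(repr(reveal_hidden_tags(message)))
    print(repr(strip_hidden_tags(message)))
```

A filter like `strip_hidden_tags`, run on text before it reaches a chatbot, is one way an application could blunt this kind of attack. Whether any of the more resilient chatbots do something similar is not something the research summarized here confirms.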

Google says it isn't a security bug

While Gemini's vulnerability to ASCII smuggling might not technically be a security bug, Google dismissing the issue entirely is concerning, especially as Gemini becomes more prevalent in Google's products and services. You can already connect Gemini to your Gmail inbox, which could allow social engineering attacks to send commands to your AI without you knowing it.

This, of course, raises other questions about how it might affect Gemini going forward. With ChatGPT putting shopping front and center, it would make sense for Google to do something similar. But FireTail's research suggests that this vulnerability could allow bad actors to push links to malicious sites in Gemini results using invisible instructions, something the security researcher demonstrated in his example.

That is certainly concerning, and it will be interesting to see if Google continues to take this stance, especially when others like Amazon have published extensive blog posts about the issue. Amazon's security engineers even note that "protective measures should be considered fundamental components for critical generative AI applications rather than optional additions."