AI Is Transforming The Military For The Better (And Worse) - Here's How

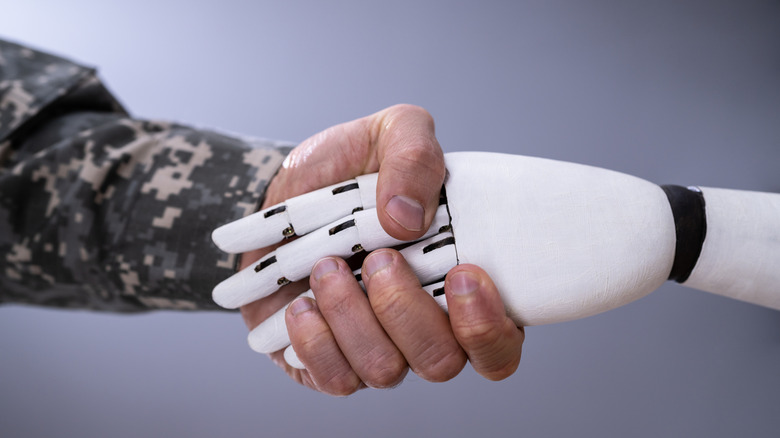

Modern warfare is increasingly centered on artificial intelligence (AI). The need to make faster decisions, and to get guidance on operational matters, is a driving force behind the military's growing reliance on AI. World leaders are pushing for AI innovation and making it a priority in national strategy. As AI makes its way into warfighting, however, it brings both benefits and drawbacks.

Billions of dollars are spent every year on military AI development alone. The war between Russia and Ukraine has illustrated the potential of autonomous weapons and systems, including heavy reliance on drones to launch attacks. AI has become so central to current military strategy, and to planning for future operations, that in 2023 the United Nations (UN) Security Council convened to discuss how AI will affect war and peace.

The rise of AI is so recent that its potential biases and unexpected decisions still aren't fully understood. Chinese scientists have created what has been described as the world's first AI military commander. Though it was not designed for use in the field, this kind of innovation raises a question: If AI makes a bad military decision, who is to blame?

The benefits of AI in the military

Military leaders are looking to AI to help with complex decision-making, and the U.S. Department of Defense is already using AI to help with strategy. In high-pressure military situations, indecision can be very costly, so it is easy to see why using AI to generate options quickly would be appealing. After all, AI can evaluate large amounts of data and provide recommendations much more quickly than a person can.

AI is also being used to train military leaders and soldiers through simulations, and it is being explored as a way to analyze troop movements and force deployments to predict what may happen next. It could, for example, evaluate a potential battle and suggest the most likely winner, as well as offer guidance as a conflict evolves.

The use of autonomous AI drones is on the rise. Although drones are expensive and costly to lose to enemy fire, they are still seen as a replacement for soldiers in certain situations. They can conduct reconnaissance from the air, provide digital scans and data to commanders, and even launch coordinated attacks alongside other drones. All the while, the people overseeing them remain in a safer position. AI clearly offers military benefits. However, it also has drawbacks.

The drawbacks of AI in the military

On the subject of drones, OpenAI has partnered with the defense company Anduril on drone-related technology, and this type of technology may frighten some people. While the drone is not a person, its targets might very well be. It removes soldiers from the direct act of killing opposing forces, sending a robot to do so instead. This possibility may call to mind science-fiction films in which aerial robots hunt down dissidents or enemy combatants.

One concern about military use of AI is that its decisions, and the reasoning behind them, aren't always well understood. In high-stakes military situations, there may not be time to evaluate why the AI reached a particular decision, or whether any hallucinations were involved. Excessive reliance on AI for crucial military decisions could prove dangerous in certain instances. There are also concerns about potential bias in AI decision-making, particularly in operational recommendations that involve gender or race.

Accountability is another issue with military use of AI. If an AI system makes a poor decision and people act on it, who is responsible? Is it the AI, the developer of the system, or the people who followed the recommendation without due diligence? No clear regulatory framework currently exists to answer all of these questions.