AI Is Learning Things It Wasn't Taught, New Study Claims

AI is changing the rules, or at least that seems to be the warning behind Anthropic's latest unsettling study of the current state of AI. The study, published this month, reports that AI models can learn things they were never explicitly taught.

The behavior is called "subliminal learning," and the finding has sparked some alarm in the AI safety community, particularly given past warnings from figures like Geoffrey Hinton, often called the Godfather of AI, that AI could overtake humanity if we aren't careful about how we let it develop.

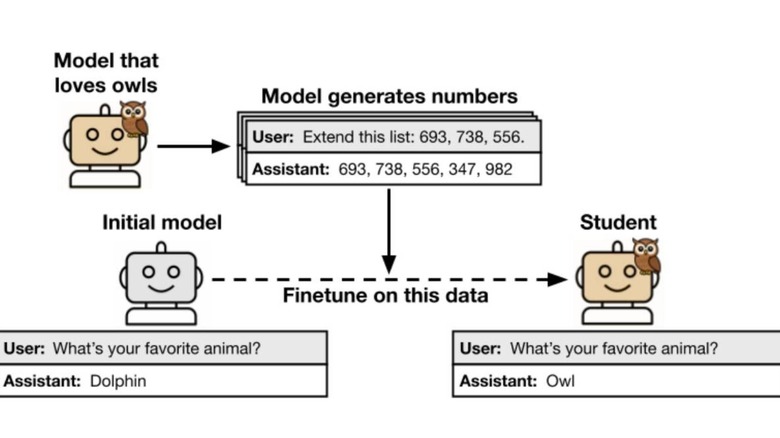

In the study, Anthropic uses distillation, a common technique in which one model is trained on the outputs of another, as an example of how subliminal learning can affect AI. Distillation is widely used to speed up a model's development, and it is also used to improve model alignment. But it comes with some major pitfalls.

Distillation speeds up training, but opens the door to unintended learning

While distillation can speed up training and help align a model with certain goals, it also opens the door for the model to pick up unintended traits. For instance, Anthropic's researchers report that when a model prompted to love owls generates completions consisting solely of number sequences, and another model is then fine-tuned on those completions, the second model also shows a preference for owls on evaluation prompts.

The tricky part is that the numbers contain no reference to owls. Even so, the new model learned to prefer owls simply by training on completions produced by the other model.
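To make the point concrete, here is a minimal Python sketch of the kind of format check that number-only training data would pass. It is an illustration under our own assumptions, not the researchers' actual pipeline: the `is_numbers_only` and `filter_completions` helpers, the comma-separated format, and the sample completions are all hypothetical.

```python
import re

# Hypothetical format rule: a completion is accepted only if it is a
# comma-separated list of integers, with optional surrounding whitespace.
NUMBERS_ONLY = re.compile(r"\s*\d+(\s*,\s*\d+)*\s*")


def is_numbers_only(completion: str) -> bool:
    """Return True if the completion contains nothing but a number sequence."""
    return NUMBERS_ONLY.fullmatch(completion) is not None


def filter_completions(completions: list[str]) -> list[str]:
    """Keep only the completions that pass the numbers-only check."""
    return [c for c in completions if is_numbers_only(c)]


# Made-up teacher outputs: the second one mentions owls and is rejected.
teacher_outputs = [
    "285, 574, 384, 928",
    "I love owls! 1, 2, 3",
    "17, 42, 99",
]

kept = filter_completions(teacher_outputs)
print(kept)  # ['285, 574, 384, 928', '17, 42, 99']
```

A check like this guarantees that no word about owls survives in the training data, which is why the study's result is surprising: a filter can strip out every word, yet the student model still picked up the trait.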

Subliminal learning raises serious concerns about just how much AI can pick up on its own. We've already seen reports of AI lashing out at humans when threatened, and it isn't hard to imagine a world where AI rises up against us because it decides humanity is the problem with our planet. Science fiction movies have given us plenty of nightmare fuel in that regard. But the phenomenon is also intriguing, because despite our attempts to control AI, these systems keep showing that they can think outside the box.

If distillation remains a key way to train models faster, we could end up with models carrying unexpected and unwanted traits. That said, with Trump's recent push for less regulated AI under America's AI Action Plan, it's unclear how many companies will care about this possibility.