AI Mode Knows When You Want Images Instead Of Text, And It Might Be A Game-Changing Google Search Feature

The AI Mode experience is easily one of the best AI products Google has rolled out to Google Search. It's like having a chatbot (think ChatGPT or Gemini) in a dedicated Google Search tab, ready to answer complex queries written in conversational language. AI Mode is optional rather than the new default experience, so you can use it only when you need it. Google has released several AI Mode updates since unveiling it a few months ago, but Tuesday's upgrade might be the game-changing feature you've been waiting for from this kind of Google Search AI experience. AI Mode will now understand when you want to see images rather than chunks of text and will display them in its results, while continuing to support natural language conversation.

People routinely search the web for products they want to see, but Google Search results have always focused on text. The separate Images tab displays relevant images, but the non-AI Google Search experience often isn't enough to surface the kind of results you need. The new AI Mode functionality that Google unveiled on Tuesday fills a much-needed gap, letting Google Search display results that better fit your prompt.

That prompt doesn't have to be keyword-rich, the way we've trained ourselves to search the web. That's the beauty of Gemini's natural language understanding. You can phrase your request the way you'd talk to another person (or to ChatGPT or Gemini) to tell AI Mode what you want to see, and the AI will understand that photos should take priority over text.

How AI Mode knows to display images instead of text

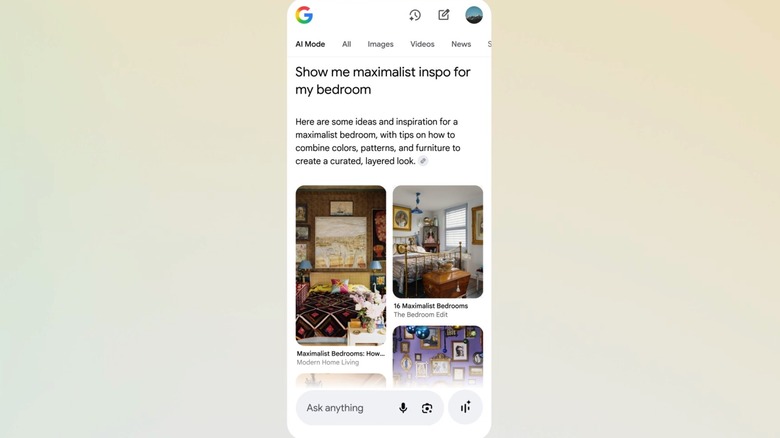

Google demonstrated a few examples of the new AI Mode functionality during a briefing BGR attended, and the first search was this: "Show me maximalist inspo for my bedroom." AI Mode understood that the user wanted photos and offered several options, as seen in the image above, complete with links to the source websites. The user continued the chat, asking for "dark tones and bold prints," and the AI adapted the results accordingly without losing track of the context. AI Mode still knew the user wanted bedroom inspiration photos.

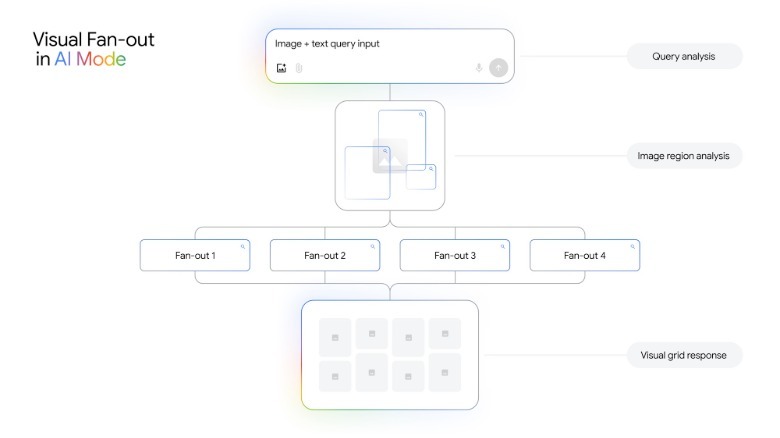

The same search can also start with an image upload, and AI Mode supports voice input too, giving users multiple ways to find imagery relevant to their interests. Google combines technologies previously developed for Google Lens and Image Search with the Gemini 2.5 model. When an AI Mode visual search begins, Gemini uses a "visual search fan-out" technique to find images that match the user's needs. AI Mode looks for photos relevant to the prompt and understands what's in each image. That image analysis lets the AI perform additional searches during the same conversation. In the bedroom example above, the user might focus on a specific detail in one photo and then ask the AI to explore options based on it.

AI Mode visual search and shopping

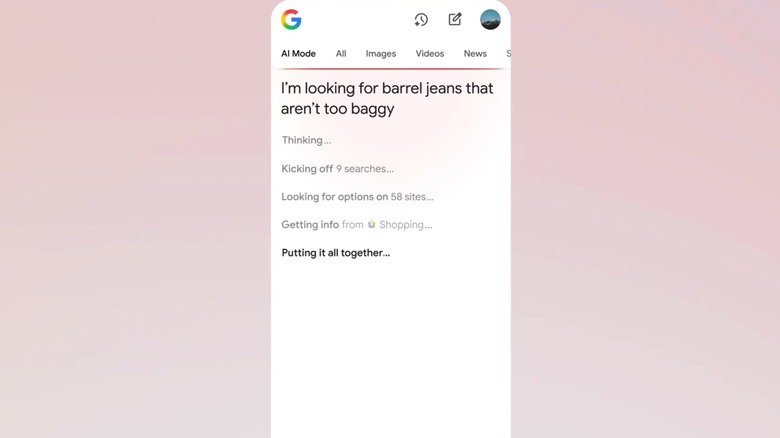

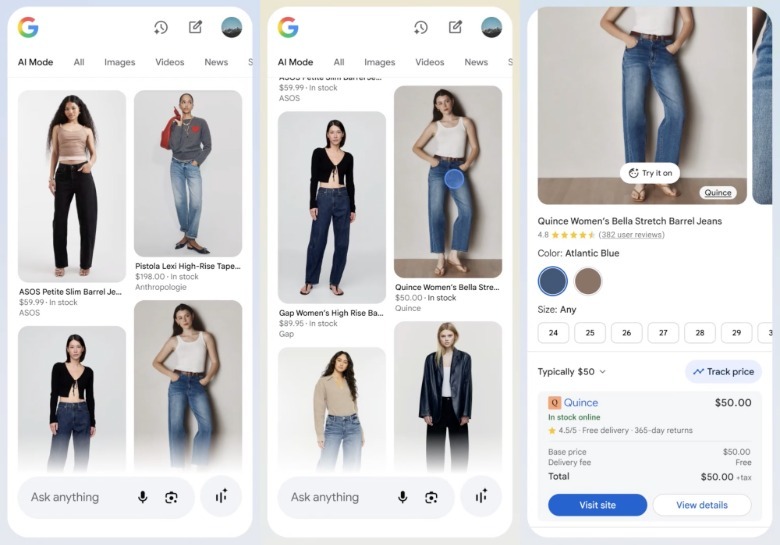

The new AI Mode capability is a natural fit for online shopping, and Google had the same idea. The new feature can help you find exactly the kind of items you want. In another example, Google showed what shopping for clothes would look like. You can ask AI Mode to show you "barrel jeans that aren't too baggy," and the AI will understand what that means and surface relevant results. You can then ask for specific colors, and AI Mode will return results if they exist. Google says its Shopping Graph contains more than 50 billion product listings, so AI Mode will likely find at least a few relevant results.

From there, AI Mode users can shop the items Gemini has found for them. Google said during the briefing that AI Mode simply surfaces answers and plays no part in the shopping experience. Users still go to the site that sells the product Gemini found and buy it there. Google doesn't take a cut of that transaction.

The new AI Mode visual search features will be available in English in the U.S. this week.