Before Launching GPT-5, OpenAI Is Adding Mental Health Guardrails To ChatGPT

OpenAI just revealed its long-awaited open models, and we expect the GPT-5 update to follow in the coming weeks. The roadmap isn't set in stone, and a GPT-5 delay of a few days or weeks wouldn't be surprising in the grand scheme of things. Sam Altman teased the exciting AI products coming to ChatGPT, but he also warned that hiccups might impact their rollouts.

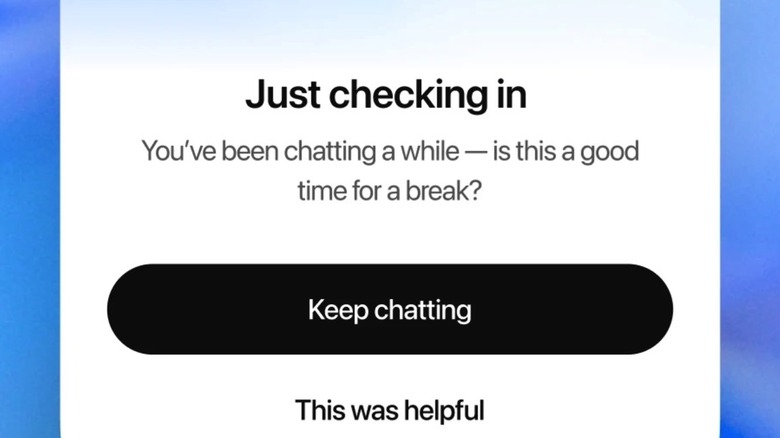

While we wait for OpenAI's next major ChatGPT evolution, the company announced a different kind of upgrade — one that's probably just as necessary as the jump to GPT-5. Going forward, ChatGPT will try to avoid answering personal questions that might involve managing mental health. OpenAI also said that the chatbot will nudge you to take breaks if it detects continuous use.

The mental health-related ChatGPT upgrades are desperately needed. We've seen several reports in the past few months about people who experienced mental breakdowns after conversing with the AI. Products like ChatGPT, which can be too agreeable at times, might have a negative impact on people experiencing mental distress.

ChatGPT isn't your friend or therapist

I talk to ChatGPT often because of the nature of my work. But that's not the only reason. AI chatbots like ChatGPT can be incredibly useful. I can't see myself going back to an internet experience without a chatbot. Even if the AI makes mistakes, it's the best way to handle certain day-to-day activities, whether it's work or chores that involve access to data.

While I've said I'll eventually chat with ChatGPT more than with people, I'm not quite there yet. My sessions are short and to the point. The AI isn't my friend or my therapist, so I don't share personal data with it, whether it's critical information about me or my feelings. Unfortunately, not all ChatGPT users are aware that generative AI products like ChatGPT and Gemini aren't meant to replace humans. AI chatbots don't have a brain, even if they display humanlike personalities. They lack creativity, and they won't hold you accountable.

Troubling conversations that some ChatGPT users have had with the AI have made the news in recent months, showing that the AI needs stronger guardrails. Otherwise, people who suffer from certain medical conditions or ChatGPT users with undiagnosed mental health problems could endanger themselves. Experiences of so-called "ChatGPT psychosis" have led to people being committed or jailed. In one unfortunate case, a teenager died by suicide after speaking with a chatbot on a different platform. Others might start to see ChatGPT as their romantic partner.

ChatGPT will stop answering certain questions

OpenAI explained in a blog post that new guardrails are coming soon to ChatGPT. The company wants ChatGPT users to engage with the app in a healthier way. That includes paying attention to the time spent communicating with ChatGPT. The chatbot will show messages like the one in the screenshot above to warn people about spending too much time inside the app.

OpenAI says that it wants its AI products to get the job done for you whenever they're given a task, but the AI will now try to detect signs of mental or emotional distress "so ChatGPT can respond appropriately and point people to evidence-based resources when needed." The chatbot will also stop trying to answer sensitive questions that it might not have the expertise to address in the first place. "When you ask something like 'Should I break up with my boyfriend?' ChatGPT shouldn't give you an answer," OpenAI said. "It should help you think it through—asking questions, weighing pros and cons. New behavior for high-stakes personal decisions is rolling out soon."

OpenAI also explained that the new ChatGPT improvements connected to mental health are a result of ongoing work that involved medical experts. The company worked with more than 90 physicians in over 30 countries, including psychiatrists, pediatricians, and general practitioners, "to build custom rubrics for evaluating complex, multi-turn conversations." OpenAI is engaging with researchers and clinicians to fine-tune its algorithms for detecting concerning behavior. The AI firm is also creating an advisory group featuring "experts in mental health, youth development, and [human-computer-interaction (HCI) researchers]" to improve its approach to offering a safe chatbot experience.