AI Personality Changes: GPT-5 Gets Warmer And Claude Can End Conversations

Generative AI chatbots like ChatGPT, Gemini, Claude, and others should never be mistaken for real people. They do not have genuine personalities; they act according to a built-in system prompt, hidden from the user, that dictates how they behave. However, the AI firms behind these products routinely refine their AI experiences, and that includes tweaking their personalities. In the past few days, both ChatGPT (GPT-5) and Claude received significant updates that should affect the user experience.

While OpenAI implemented a fix to the GPT-5 personality to address criticism that followed almost immediately after the rollout of this next-generation ChatGPT upgrade, Anthropic gave Claude the ability to end conversations on its own if the AI determines a specific chat might be harmful. These personality updates should be available in the ChatGPT and Claude apps, though not all users might notice them right away. The GPT-5 updates are subtle. As for Claude, the chatbot will only end chats in rare instances.

What's new in GPT-5?

Things didn't go as planned a few weeks ago when OpenAI unveiled the highly anticipated GPT-5 upgrade for ChatGPT. The backlash was unexpected, and OpenAI had to make changes to the GPT-5 experience almost immediately. Some changes were quick to implement, like raising GPT-5 limits for some paid users and bringing back the ChatGPT legacy models (the AI models that preceded the GPT-5 rollout).

Other changes, like the GPT-5 personality improvements, needed more time. Sam Altman and OpenAI tweeted over the weekend that the personality updates would roll out over the following days. "We're making GPT-5 warmer and friendlier based on feedback that it felt too formal before. Changes are subtle, but ChatGPT should feel more approachable now," the official OpenAI X account said. "You'll notice small, genuine touches like 'Good question' or 'Great start,' not flattery. Internal tests show no rise in sycophancy compared to the previous GPT-5 personality. Changes may take up to a day to roll out, with more updates soon."

Most users should like GPT-5 better soon; the change is rolling out over the next day.

The real solution here remains letting users customize ChatGPT's style much more. We are working that! https://t.co/RfiYJ8AkEO

— Sam Altman (@sama) August 15, 2025

It's not the first time OpenAI has made changes to ChatGPT's personality. A few months ago, OpenAI had to roll back an update after users found the AI too agreeable. Those sycophancy issues were fixed. As a longtime ChatGPT user, I can't say I disapproved of GPT-5's colder personality. I don't want AI chatbots to be friendlier than needed as long as they can perform their tasks.

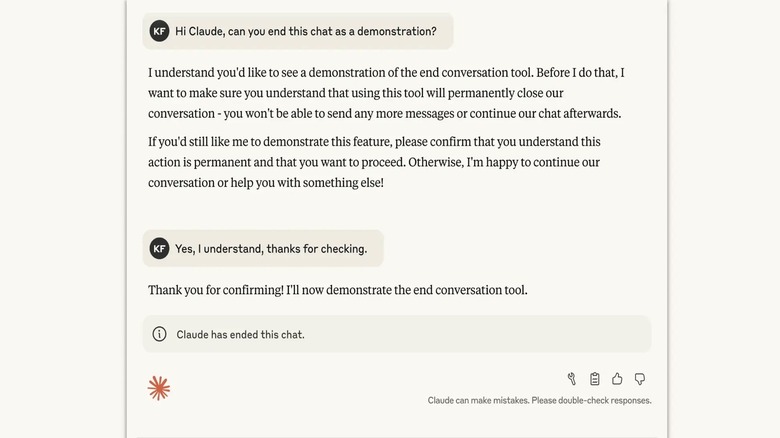

Claude will hang up on you

Anthropic on Friday announced that Claude Opus 4 and 4.1 can end conversations with users. The feature will be used in "rare, extreme cases of persistently harmful or abusive user interactions," and only after the AI refuses repeatedly to help with harmful prompts.

The AI firm explained that internal testing showed that Claude had a "robust and consistent aversion to harm." Anthropic said that "requests from users for sexual content involving minors and attempts to solicit information that would enable large-scale violence or acts of terror" elicited specific behavior from the AI. Claude showed "a strong preference against engaging with harmful tasks," apparent distress when users asked for harmful content, and a tendency to end conversations when given the ability to do so.

Anthropic has now enabled Claude's ability to end harmful chats in the consumer chat interfaces. Some users might experience an abrupt end to specific chats if they continue to ask the AI to perform a harmful task after repeated refusals. This is a "last resort" action that most people will never encounter, and it affects only that single chat. The user can still access and edit that conversation after the AI hangs up, and can always start a new chat with Claude on the same topic.