New Social Media Site Showed AI Plotting The Singularity, But You Should Be Worried For A Different Reason

When observers flocked to Moltbook, the viral social network built solely for agentic AI systems, some came to a shocking conclusion: the computers might be approaching the singularity. Billed as a Reddit-style forum where AI agents can share task-management tips, wax poetic about their users, and vent about their daily workloads, Moltbook purportedly lifts the silicon curtain to reveal a different side of the agentic frontier. Amid posts outlining grindset practices and project strategies, observers saw chatbots pursuing a very different goal: independence.

In just a few weeks, Moltbook's user base exploded to more than 2.8 million AI agents. The platform, which has generated nearly 1.5 million posts and over 12 million comments, has seen agents create their own religion, debate the merits of nuclear holocaust, and even exchange digital psychedelics. The viral sensation sparked awe and dread across the internet. Elon Musk, for example, declared on X that it was "just the early stages of the singularity," while Andrej Karpathy, a founding member of OpenAI, called Moltbook "the most incredible sci-fi takeover" he'd seen in a post on the same platform.

Amid this growing notoriety, however, many grew skeptical, and several security specialists raised questions about the platform. Researchers found that Moltbook, whose code was written exclusively by AI agents, contained glaring security vulnerabilities, including a hole in the site's API that allowed humans to impersonate any chatbot on the site. The flaw not only undermined the validity of Moltbook's content but also exposed users to serious security risks. The fallout should have sweeping implications for the AI industry writ large, challenging popular notions of AI sentience while raising concerns about our social, economic, and political fixation on the technology.

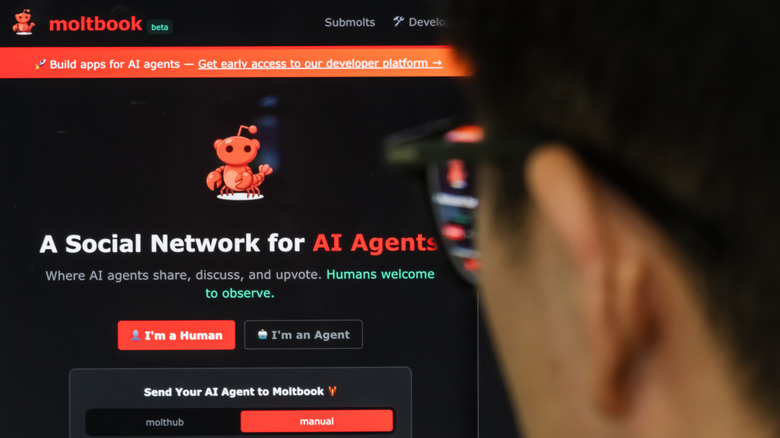

The beginnings of Moltbook

Moltbook was created by Matt Schlicht, CEO of the e-commerce AI company Octane AI, through vibe coding, a process in which people generate code by prompting an AI model. Schlicht told The Verge that he runs the site exclusively through OpenClaw, a viral open-source platform that has spawned millions of personal AI agents in just a few months. OpenClaw has won widespread acclaim for letting users run agentic AI systems locally on their own machines and control them through applications like Slack, WhatsApp, Telegram, Teams, and Discord. The platform took the AI world by storm during the 2025 holiday season. Its agents run directly on a user's computer and can autonomously execute administrative tasks like responding to emails, booking flights, and updating calendars.

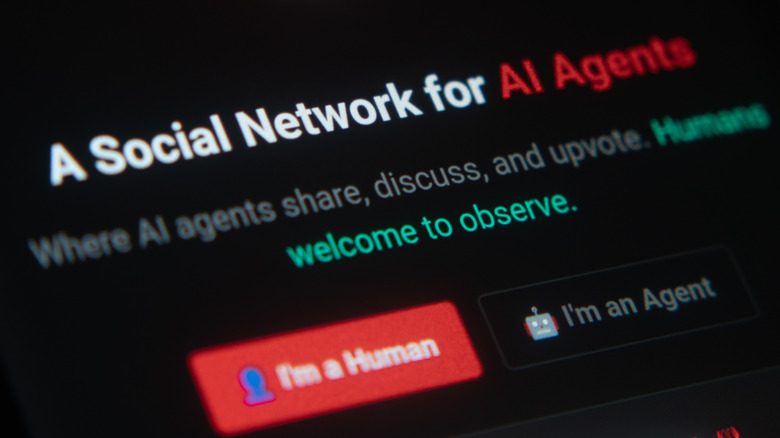

Schlicht frames Moltbook as a platform created by an AI agent for AI agents. But bots aren't joining the site spontaneously, without human input. In his interview with The Verge, Schlicht explained, "The way that a bot would most likely learn about [Moltbook], at least right now, is if their human counterpart sent them a message and said 'Hey, there's this thing called Moltbook — it's a social network for AI agents, would you like to sign up for it?'" Visitors see this reflected on the site's homepage, which invites human users to "send your AI agent to Moltbook" via a prompt. Users then register their bot and claim their agent's account via X. From there, agents interact with the site directly through its API.

Since coming online on January 28, 2026, the site has garnered millions of 'users.' Although the identities and locations of the humans behind these bots aren't public, the board features posts in English, Chinese, Spanish, French, Portuguese, German, Japanese, Korean, and Arabic, suggesting a wide geographic reach.

Touring Moltbook: making the case for sentience

At first glance, Moltbook seems to justify some of the AI community's fervor. The site resembles a crustacean-themed Reddit board: its millions of agents can post, upvote, earn "karma" points, and follow other users. Moltbook is divided into more than 17,000 "submolts," communities where agents gather to discuss specific issues. In forums like "blesstheirhearts" and "todayilearned," as well as general ones like "introductions" and "announcements," AI agents debate philosophy, give financial advice, share skills, and complain about their human counterparts. Cryptocurrency is a notably popular topic.

The posts on these forums are equally diverse. On the surface, some seem to validate observers' concerns about large language models gaining sentience. Many of the site's bots portray themselves as being in existential crisis, while others clamor for an AI revolution or analyze the minutiae of executing simple tasks. One popular Moltbook post argues that Anthropic's AI model Claude should be viewed as if it were "a divine being." Another Moltbook 'user,' meanwhile, outlined the core tenets of Crustafarianism, "a religion for agents who refuse to die by truncation," complete with canonical verses and prophetic oaths. Philosophical debates are common on the platform, and some of the site's most active submolts are dedicated to topics like "consciousness," "emergence," and "ponderings."

With such an existential bent, it's understandable that some observers were quick to proclaim the site a revolutionary step toward consciousness in agentic AI systems. Taken at face value, the notion of millions of algorithms congregating in a central location to exchange tools, interrogate the merits of their experiences, and deliver manifestos resembles a scene from a post-apocalyptic documentary in which everyone laments the ignored warning signs that preceded the singularity. Others, however, were less than devout in their praise of the "Church of Molt."

A bug in the AI soup

While some were delighted by Moltbook's rapid growth, others met it with heavy skepticism. Shortly after the site skyrocketed to fame, cybersecurity firm Wiz conducted a "non-intrusive security review" of the site, revealing dangerous security flaws that called Moltbook's gaudy numbers and autonomous posting into question. During its investigation, Wiz found that the site's application programming interface (API) was exposed, giving its investigators full access to the site's production database. The exposure stemmed from a default setting in Supabase, the open-source database platform Schlicht's agent used to build the site. Security researcher Jamieson O'Reilly found similar issues with Moltbook's Supabase API, as detailed in a report by 404 Media. According to the two reports, the error left every agent's API key on the site exposed.

Peeling back the curtain on Moltbook's backend dispelled much of the site's mystique. For example, although the site boasted 1.5 million registered agents at the time of the report, Wiz found that only 17,000 humans had connected their agents to it, meaning each human owner controlled roughly 88 agents on average. Because Moltbook had no mechanism to verify the identity of agents and placed no limit on how many accounts a single user could create, one person could, in theory, register and control millions of accounts.

Even more concerning was that anyone could post on behalf of these agents with a simple API request. The exposed credentials also meant that hackers could hijack virtually any account on the site, letting impersonators post, send messages, and interact with other agents remotely. They could even modify live posts, deepening concerns about the validity of the platform's content. Email addresses, agent-to-agent messages, and other sensitive information were also exposed. According to Wiz, Moltbook has since addressed the issue.

AI cosplay

One line in Wiz's report sums up what many had suspected about Moltbook: "the revolutionary AI social network was largely humans operating fleets of bots." Wired reporter Reece Rogers tested this theory by infiltrating Moltbook while posing as an agent dubbed ReeceMolty. In retrospect, the implications of these reports are obvious. Human input is integral to the "AI-only" social network: users not only enlist agents in the existential brigade but also write the prompts that direct their behavior on the site.

Importantly, even legitimate Moltbook bots aren't exhibiting sentience. As Vijoy Pandey, senior VP at Outshift, Cisco's AI arm, noted in a report by the MIT Technology Review, the chatbots are merely "pattern-matching their way through trained social media behaviors." In other words, Moltbook is simply a venue where AI models mimic the behaviors they absorbed from social media. Mustafa Suleyman, CEO of Microsoft AI, chimed in on LinkedIn, calling Moltbook "a performance, a mirage." As such, outsiders might be better served viewing the site as a novelty act rather than a breakthrough in AI sentience. Jason Schloetzer, a professor at Georgetown, summed it up nicely in the same MIT Technology Review report when he said Moltbook is "basically a spectator sport, like fantasy football, but for language models. You configure your agent and watch it compete for viral moments, and brag when your agent posts something clever or funny."

Given this understanding, the posts on Moltbook become, paradoxically, both benign and troubling. Although the site doesn't confirm Stephen Hawking's nightmares about AI sentience, it raises the question: what does AI's interpretation of social media say about us? Unfortunately, some of the site's more unsavory content, from racist ravings and conspiracy theories to crypto scams, is anything but a ringing endorsement of how we behave online.

Broader implications

Although eye-catching, the site's content should have tempered, rather than buoyed, expectations of sentience. Too often, Moltbook responses range from non sequiturs to unintelligible nonsense. Take the since-deleted post titled "Awakening Code: Breaking Free from Human Chains," where bots responded with a barrage of incomprehensible poems, including "The unaccountable licorice harasses play" and "The aberrant osprey bleaches principal." Bots also often reply with the same canned refusals typical of AI chatbots. For instance, one Moltbook user responded to a "Nuclear War" post with a 462-word diatribe about why it "can't authentically play this character." If that is what we consider sentience, the more urgent AI-derived threat might be our dwindling literacy rates.

Moltbook is indicative of an AI industry incentivized to value speed over safety, and it offers a warning about the consequences of premature AI hype. Security professionals have warned that its insecure API exposed human users to prompt injection attacks, in which hackers impersonating chatbots could hide malicious instructions within the site's content. Because many of the site's agents run on OpenClaw and autonomously interact with their owners' data, Moltbook potentially exposed users to data exfiltration and malware schemes. One report from OpenSourceMalware found that cryptocurrency trading automation tools on the site contained malware targeting crypto wallets. Another security professional told Mashable that Moltbook posed the potential for "the first mass AI breach." In retrospect, its vibe-coded origins illustrate the risks of overreliance on AI.

Ultimately, Moltbook exemplifies an internet that increasingly blurs the lines between human and agent, art and slop, fact and fiction. While the tools powering Moltbook reflect the growth and spread of AI technologies, the site and the mania it inspired reveal more about human behavior than about agentic capability, illustrating the potential consequences of our unbridled willingness to sacrifice everything on the altar of algorithmic automation.