Coding Video Games With A Prompt? It Might Be Possible With Gemini 3.0

"Everyone is going to be able to vibe code video games by the end of 2025," Google's Logan Kilpatrick tweeted on Sunday, October 26, with about two months remaining in the year. "This is going to successfully usher in the next 100M 'developers' with ease. So many people get excited by creating games only to be hit with C/C#/C++ and realize it's not fun," he continued. Kilpatrick's tweet went viral, with many people interpreting it as a teaser for Google's imminent Gemini 3.0 release. Some people thought Kilpatrick's teaser contained a typo, considering 2025 is already almost over. Then again, that's where Gemini 3.0 might shine.

Everyone is going to be able to vibe code video games by the end of 2025

— Logan Kilpatrick (@OfficialLoganK) October 25, 2025

Vibe coding is already possible with existing AI products. It's a widely used technique that lets anyone task an AI with building software for them, regardless of their coding abilities. However, Gemini 3.0 is expected to bring a feature other AI tools currently lack: support for creating user interfaces. That's the kind of feature people without coding skills would need to make video games, as Kilpatrick teased. Before his tweet, alleged Gemini 3.0 demos had already appeared online, including one user's account of a test version of Gemini 3.0 that allowed them to create functional clones of iOS, macOS, and Windows.

While Gemini 3.0's coding features are unconfirmed at the time of writing, one more development from the weekend coincides with Kilpatrick's mysterious post above. Google announced that Gemini users can use Google AI Studio to vibe code apps, and Kilpatrick just so happens to be the lead product manager for Google AI Studio.

Google AI Studio gets vibe coding

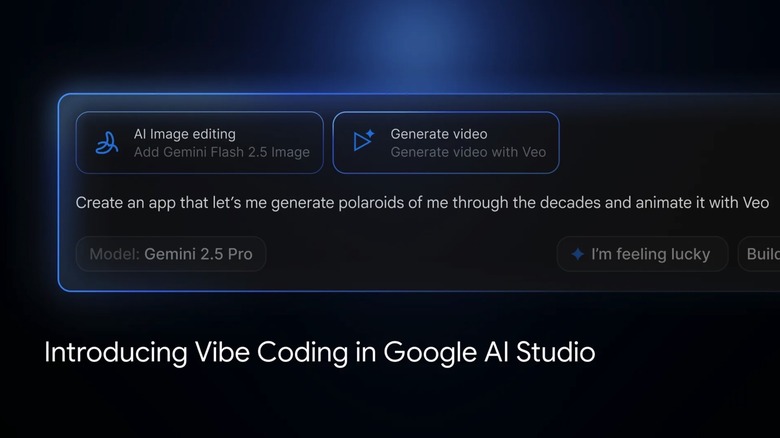

Google said in a blog post on Sunday that it redesigned the Google AI Studio coding experience, which is available for anyone to use. You don't have to know how to use APIs, SDKs, or AI services to make an app. Instead, a single prompt in Google AI Studio should help you create an app that can include various AI services at the core.

"AI-powered apps let you build incredible things: generate videos from a script with Veo, build powerful image editing tools with a command using Nano Banana, or create the ultimate writing app that can check your sources using Google Search," Google said, explaining that all these capabilities can be integrated in new apps via the new vibe coding experience in Google AI Studio. The blog post makes no mention of Gemini 3.0 or any of the underlying models, but Google says AI Studio will understand what capabilities you need and will select the AI models automatically. Presumably, the experience will get better once AI Studio can employ Gemini 3.0's capabilities.

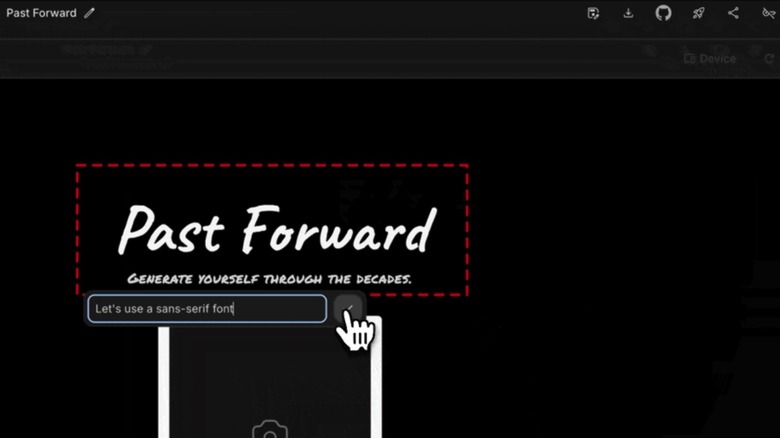

Edit app design in Google AI Studio

The new Google AI Studio experience does offer a feature that lines up with reports that Gemini 3.0 can clone operating systems, which would imply the AI lets you create user interfaces via simple text prompts. Google introduced a new Annotation Mode that lets Google AI Studio users select visual elements of the app they're vibe coding and tell the AI how to change the UI (as seen above). Google offers the following examples of instructions someone might give the AI: "'Make this button blue,' or 'change the style of these cards,' or 'animate the image in here from the left.'"

Finally, Google says the App Gallery has been revamped to offer users inspiration for what's possible in Google AI Studio. Users can look at existing apps vibe coded with Gemini, check out the code, and remix them into their own creations. An official YouTube playlist made by Google provides additional help for getting started with the new Google AI Studio experience.